A NAS tale

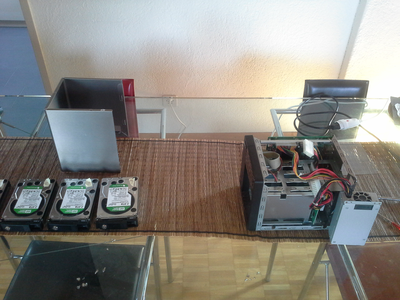

In August 2009 I decided it was time to replace my old Pentium II, which was serving five old Sun storage disks (the enormously noisy white boxes, for those who remember), with a modern NAS. I bought a QNAP TS-439 Pro. The integrated firmware (a customized Linux) gave me the creeps from a software design point of view, but it did the job.

Almost exactly a year later a fatal event occurred and my software RAID (RAID 5) refused to assemble anymore. I thought, well, the hardware is pretty standard, why not give CentOS a try. It worked well until…

After two and a half years came CentOS 5.9. It introduced a fatal NFS bug that made only half of your data visible (something to do with inode caching). So I turned my back on CentOS (on the NAS, not in general).

In 2013 I finally installed Arch Linux, after a rather disastrous experience with Ubuntu 12.04 LTS: Ubuntu was not happy with the 1 GB of RAM, and NFS just performed poorly.

After four years I can say that there is nothing wrong with running a bleeding-edge Linux distribution like Arch Linux on a NAS. I do recommend the LTS kernel, though, as the bleeding-edge one may get you into trouble from time to time.

In summer 2015 I had the brilliant idea of moving the by now slightly noisy NAS into a cabinet under a hot tin roof (sounds stupid, right?). Of course, the PSU went belly up and the DOM (a small flash drive to boot from, attached via an HDA connector) melted. The PSU model (FSP220-60LE) was a bit tricky to get, and the replacement DOM was about 2 millimeters too tall and needed a good squeeze to fit into the case. The layout inside the box is quite cramped (it is very small and cubic, after all), and I was really happy I didn't have to replace the motherboard.

The real issue was a bad-sector problem on the disks of the software RAID. This is a case software RAID usually doesn't handle well, and where you are better off with a hardware RAID. I had to 'dd' the block data manually and force the disks to reallocate the affected sectors.
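The exact commands used for that repair are not recorded here, but the idea behind it can be sketched. In RAID 5 the parity block of a stripe row is the XOR of its data blocks, so a block that one disk can no longer read can be rebuilt by XOR-ing the corresponding blocks of all the other members. Below is a minimal Python sketch, assuming a Linux md RAID 5 whose members share the same partition layout and md data offset (so the same sector number on every member belongs to the same stripe row) and 512-byte logical sectors. The function names are hypothetical. Writing the rebuilt block back over the bad sector (what `dd` with `seek=` and `conv=notrunc` would do) gives the drive the chance to remap a pending sector.

```python
"""Rebuild one unreadable sector of a RAID 5 member from the other members.

Assumptions (hypothetical setup, not a recorded procedure):
- all members share the same partition layout and md data offset, so the
  same sector number on every member belongs to the same stripe row;
- 512-byte logical sectors;
- the array is stopped and nothing else writes to the disks.
"""
import os

SECTOR_SIZE = 512  # assumption: 512-byte logical sectors


def read_sector(path: str, lba: int, sector_size: int = SECTOR_SIZE) -> bytes:
    """Read one sector at logical block address `lba` from a device or image."""
    with open(path, "rb") as dev:
        dev.seek(lba * sector_size)
        data = dev.read(sector_size)
    if len(data) != sector_size:
        raise OSError(f"short read from {path} at LBA {lba}")
    return data


def reconstruct_block(surviving: list[bytes]) -> bytes:
    """XOR the same-offset blocks of all surviving members.

    Parity is the XOR of the data blocks in a stripe row, so XOR-ing every
    block except the lost one yields the lost block, whether it held data
    or parity.
    """
    if not surviving:
        raise ValueError("need at least one surviving member")
    size = len(surviving[0])
    if any(len(block) != size for block in surviving):
        raise ValueError("all blocks must have the same size")
    acc = 0
    for block in surviving:
        acc ^= int.from_bytes(block, "big")
    # Fixed-size conversion keeps leading zero bytes intact.
    return acc.to_bytes(size, "big")


def write_sector(path: str, lba: int, data: bytes,
                 sector_size: int = SECTOR_SIZE) -> None:
    """Overwrite one sector in place and flush it to the device."""
    if len(data) != sector_size:
        raise ValueError("data must be exactly one sector long")
    with open(path, "r+b") as dev:
        dev.seek(lba * sector_size)
        dev.write(data)
        dev.flush()
        os.fsync(dev.fileno())


if __name__ == "__main__":
    # Toy stripe row: two data blocks and their parity (d0 XOR d1).
    d0 = bytes([0x11] * SECTOR_SIZE)
    d1 = bytes([0x2C] * SECTOR_SIZE)
    parity = reconstruct_block([d0, d1])
    # Pretend the disk holding d1 has a bad sector: rebuild it.
    assert reconstruct_block([d0, parity]) == d1
    print("recovered d1 from d0 and parity")
```

On a real array one would call `read_sector` on each surviving member at the bad sector's number, pass the results to `reconstruct_block`, and only then call `write_sector` on the failing disk; double-checking the device names before writing matters far more than the XOR itself.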

Another real problem is having only 1 GB of RAM. It seems that 'fsck' on 4 TB of data needs slightly more memory for its data structures than is available, so it cannot check the whole filesystem. There is an fsck mode that keeps these structures in small temporary files on disk instead of in memory, but it is very slow, and besides, who wants to check a filesystem while storing temporary files on the very disks being checked?

Since then no further incidents have occurred and the machine has been working reliably, though the fan is a bit noisy and could do with some cleaning…